While open data sources such as PRONOM, the Conversion Software Registry (CSR) and govdocs are excellent examples of publishing re-usable data (to some extent), there is still a big problem with gaining access to other sources of data. This is mainly because projects and organisations focus on their own internal aims rather than on the re-usability of their data. PRONOM and govdocs are great examples of where this is not the case, but other valuable sources of data are disappearing for many of the wrong reasons. At the other end of the scale, the currently published datasets are missing valuable information relating to their context, version and provenance.

Jeni Tennison puts some of the issues very succinctly:

If this statement is that obvious, why are so many in the preservation community constantly changing their minds…

In the preservation community, the current state of the art is PRONOM, thanks to Tim Gollins. PRONOM had been expanding, changing and becoming unmanageable, exactly what Jeni is warning about in the above statement. By reducing the vocabulary of PRONOM, the footprint of the service was significantly reduced. As a further benefit, the data quality increased because low quality, low availability data was deleted. In short, a decision was made here.

With the smaller footprint, time could be invested in opening up the data in more forms than simply XML and a highly custom web interface. Rather than inventing a new custom API and interface, TNA decided to follow the W3C’s recommendations on publishing 21st century Linked Open Data (W3C Design Issues – Linked Data). As it turns out, this step wasn’t too hard and provided some instant opportunities to expand PRONOM a little in order to “LINK” it to other sources of data.

So what is the problem?

- Not enough data sets are available as 20th century open data (let alone 21st century). There need to be more high quality, small and easy to maintain datasets.

- Of the 21st century linked datasets, very few are maintained with full provenance information (not that they were before).

It is the second point that is particularly relevant when it comes to analysing risk related to changes. This is a problem not just for the preservation community but also in the wider area of web and semantic web research. Indeed, the problem of provenance information is known to this community (http://www.jenitennison.com/blog/node/141), with whom we appear not to be working very closely.

A Way Forward

Data is the key to applications working; identification tools are one example. So why do we need historical data relating to when things change and what changes?

QUESTION: When I identified this file it said it was a PDF, do I need to re-identify it to make sure the tool was correct?

ANSWER: You could re-scan everything, or look at the historical identification information to see if such identifications have ever changed and why. The current identification could be the wrong one!
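The second option in the answer, checking the history before re-scanning, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Identification` record, the `identification_changes` helper and the sample values are hypothetical and are not taken from any real tool or published dataset.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Identification:
    """One historical identification result (hypothetical record layout)."""
    tool: str
    version: str
    scanned_on: date
    result: str  # the format reported, here a MIME type


def identification_changes(history):
    """Return (previous, current) pairs where a tool changed its answer."""
    changes = []
    last_by_tool = {}
    # Walk the records in date order, tracking each tool's latest answer.
    for record in sorted(history, key=lambda r: r.scanned_on):
        previous = last_by_tool.get(record.tool)
        if previous is not None and previous.result != record.result:
            changes.append((previous, record))
        last_by_tool[record.tool] = record
    return changes


# Made-up history for a single file, for illustration only.
history = [
    Identification("tool-a", "1.0", date(2010, 5, 1), "application/zip"),
    Identification("tool-a", "1.1", date(2011, 5, 1), "application/pdf"),
    Identification("tool-b", "2.0", date(2011, 6, 1), "application/pdf"),
    Identification("tool-a", "1.2", date(2012, 5, 1), "application/pdf"),
]

for before, after in identification_changes(history):
    print(f"{after.tool}: {before.version} said {before.result}, "
          f"{after.version} says {after.result}")
```

An empty result means no recorded identification of the file ever changed, which is evidence (though not proof) that a full re-scan is unnecessary.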

Without provenance information and historical data, preservation policies are very difficult to review as it is not clear why decisions were made. This is a problem, but can be solved.

As part of the SCAPE/OPF/University of Southampton work, the LDS3 Specification for managing fully provenance aware datasets was constructed. This specification was then implemented in order to be a publication platform for digital preservation data and also a potential way of solving the PRONOM problem with provenance information.

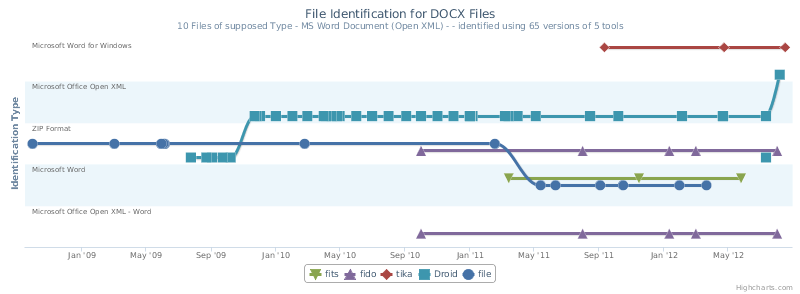

One of the first datasets to be ingested contains historical identification data for a number of the govdocs files, so people can query a particular version of an identification tool and find out what result it output at the time. While this is quite boring on its own, the resulting format profile graphs are of interest: they show how identification results have changed over time, sometimes in significant ways, as shown below:

This chart shows the identification results for 65 versions of 5 tools (fits, fido, tika, droid and file) over a period of 4 years. The files being scanned are all believed to be DOCX files (according to the ground truth), but here we can see some tools identifying the files as ZIP files, while others identify the MIME type as DOC.
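A format profile of this kind is essentially a count of reported formats per tool version. The following is a minimal sketch, assuming results are available as simple tuples; the `format_profile` helper, the tool names, version labels and sample rows are all made up for illustration and do not reproduce the actual data behind the chart.

```python
from collections import Counter, defaultdict

DOCX = "application/vnd.openxmlformats-officedocument.wordprocessingml.document"


def format_profile(results):
    """Count reported formats per (tool, version).

    `results` is an iterable of (tool, version, filename, mime_type) tuples.
    """
    profile = defaultdict(Counter)
    for tool, version, _filename, mime_type in results:
        profile[(tool, version)][mime_type] += 1
    return dict(profile)


# Made-up results for three files believed to be DOCX.
results = [
    ("tool-a", "1.0", "a.docx", "application/zip"),
    ("tool-a", "1.0", "b.docx", "application/zip"),
    ("tool-a", "1.0", "c.docx", DOCX),
    ("tool-a", "1.1", "a.docx", DOCX),
    ("tool-a", "1.1", "b.docx", DOCX),
    ("tool-a", "1.1", "c.docx", "application/msword"),
]

profile = format_profile(results)
for (tool, version), counts in sorted(profile.items()):
    print(tool, version, dict(counts))
```

Plotting one line per reported format across the versions of a tool would give a graph of the same general shape as the one described above.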

This is an early result, but from the number of changes in the graph (see the blue and purple lines) we can clearly see that there is a need to know such data in order to analyse risk in the preservation community. Further, all this data is going to be openly available.

Where From Here

How do we get people to create more high quality and maintainable preservation datasets? Do such datasets even exist, and if so, where are they and how do we get at them? For the most part these are not technological problems. What is the business of open datasets (http://www.jenitennison.com/blog/node/172)?

There is still work to be done with the WIDER community on how to enable clear discovery of current and historical data. Further, can we dynamically query historical data using protocols such as Memento?
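As a rough sketch of what such a query could look like: the Memento protocol (RFC 7089) lets a client ask a TimeGate for the state of a resource at a given time by sending an `Accept-Datetime` request header. The TimeGate URL below is a placeholder and the helper names are hypothetical; whether any particular preservation data service exposes a TimeGate is an assumption. The code only builds the request and does not send it.

```python
from datetime import datetime, timezone
from email.utils import format_datetime
from urllib.request import Request


def accept_datetime(when):
    """Format an aware datetime as the HTTP date used by Accept-Datetime."""
    return format_datetime(when.astimezone(timezone.utc), usegmt=True)


def build_timegate_request(timegate_url, when):
    """Build (but do not send) a datetime-negotiation request."""
    headers = {"Accept-Datetime": accept_datetime(when)}
    return Request(timegate_url, headers=headers)


# Placeholder TimeGate URL, not a real service.
request = build_timegate_request(
    "http://example.org/timegate/http://example.org/dataset",
    datetime(2012, 8, 31, tzinfo=timezone.utc),
)
print(request.full_url)
print(accept_datetime(datetime(2012, 8, 31, tzinfo=timezone.utc)))
```

A TimeGate that supports the protocol would typically redirect to the archived version closest to the requested date, which is what would make historical identification data queryable without a custom API.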

Within the preservation community, how do we build provenance aware services for users? Where do these fit into the current situations? Do they scale?

Proofs of concept are coming along; however, integrated platforms are still more silos than integrated solutions, which is a problem.

Update – 31/08/2012

Updated the graph to reflect fixes to the code. Now we can see that fido in particular has a problem with these 10 files as it identifies them as not only the *wrong* format, but as one of two different formats. More to come here.

Update – 3/10/2012

Publication and slides given at iPres2012:

Tarrant, David and Carr, Leslie (2012) LDS3: Applying Digital Preservation Principals to Linked Data Systems. In, Ninth International Conference on Digital Preservation (iPres2012), Toronto, Canada