Automated identification of corresponding images and duplicate removal is a challenging task due to inconsistent quality of digitized book collections. Validating such collections based on qualitative criteria is a challenging endeavor according to the sheer amount of data that has to be processed. Traditional approaches seem to have peaked at a certain level. Scientists at the Austrian Institute of Technology developed a generalized method providing detailed comparison of corresponding images even on historical or handwritten texts

by Alexander Schindler and Reinhold Huber-Mörk

National libraries maintain huge archives of artifacts documenting a country’s cultural, political and social history. Providing public access to valuable historic documents is often constrained by the paramount task of long-term preservation. Digitization is a mean that improves accessibility while protecting the original artifacts. Assembling and maintaining such archives of digitized book collections is a complex task. It is common that different versions of image collections with identical or near-identical content exist. Due to storage requirements it might not be possible to keep all derivate image collections. Thus the problem is to assess the quality of repeated acquisitions (e.g. digitization of documents with different equipment, settings, lighting) or different downloads of image collections that have been post processed (e.g. denoising, compression, rescaling, cropping, etc.). A single national library produces millions of digitized pages a year. Manually cross-checking the different derivates is an almost impossible endeavor.

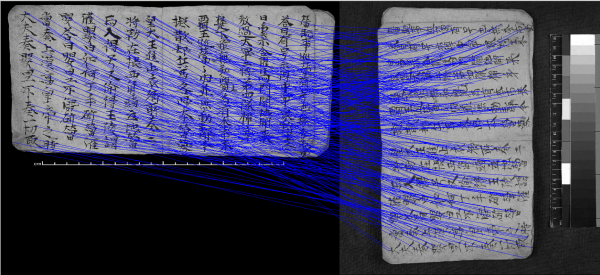

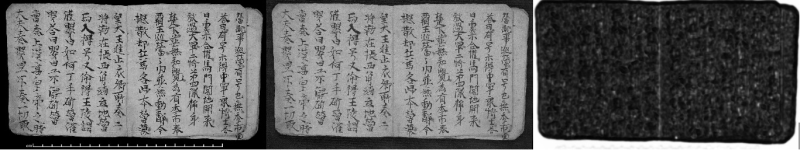

Traditionally this problem was approached by using Optical Character Recognition (OCR) to compare the textual content of the digitized documents. OCR, though, is prone to many errors and highly dependent on the quality of digitized images. Different fonts, text orientations, page layouts or insufficient preprocessing complicate the process of text recognition. It also fails on handwritten and especially foreign, non-western texts. Pixel-wise comparison using digital image processing is only possible as long as no operations changing the image geometry, e.g. cropping, scaling or rotating the image, were applied. Furthermore, in cases of filtering as well as color or tone modifications the information at pixel level might differ significantly, although the image content is well preserved (see Figure 1).

Scientists of the Safety and Security Department at the Austrian Institute of Technology (AIT) developed a method to efficiently detect corresponding images between different image collections as well as to assess their quality. By using inter point detection and derivation of local feature descriptors, which have proven highly invariant to geometrical, brightness and color distortions, they are able to successfully process even problematic documents (e.g. handwritten books, ancient Chinese texts).

Scale-invariant Feature Transform (SIFT) is an algorithm widely used in digital image processing to detect descriptive local features of images that can be used to identify corresponding points in different images. Such points are generally located at high-contrast regions. Thus, this approach is ideally for document processing because high contrast is a property of readability. In order to be able to identify the correspondence of images between two different collections without reference to any metadata information, a comparison based on a visual dictionary, i.e. a Visual Bag of Words (BoW) approach is suggested. Following this approach a collection is represented as a set of characteristic image regions. Ignoring the spatial structure of these so called visual words, only their sole appearance is used to construct a visual dictionary. Using all descriptors of a collection the most common ones are select as a representative bag of visual words. Each image in both collections is then characterized by a very compact representation, i.e. a visual histogram counting the frequencies of visual words in the image. By means of such histograms different collections can be evaluated for corresponding, duplicate or missing images. A further option is the detection of duplicates within the same collection, with the exception that a visual dictionary specific to this collection is constructed beforehand. It is worth to mention that the Visual BoW is related to the BoW model in natural language processing, where the bag is constructed from real words.

Having solved the image correspondence problem using the visual BoW, a detailed comparison of image pairs, i.e. an all-pairs problem with respect to all pairs of possibly matching descriptors between two images, is performed. Corresponding images are processed using descriptor matching, affine transformation estimation, image warping and computation of the structural similarity between the images.

First results based on a dataset of 20 books with approximately 500 pages each showed high robustness with respect to geometric transformation between images and change of file format and color information. Future activities will include application of the method to large scale data sets employing the SCAPE platform, an open source platform that orchestrates semi-automated workflows for large-scale, heterogeneous collections of complex digital objects.

This work is part of the SCAlable Preservation Environments (SCAPE) project which aims at developing scalable services for planning and execution of institutional digital preservation strategies. The SCAPE project is co-funded by the European Union under FP7 ICT-2009.4.1 (Grant Agreement number 270137).